April 19, 2024 | Sponsored

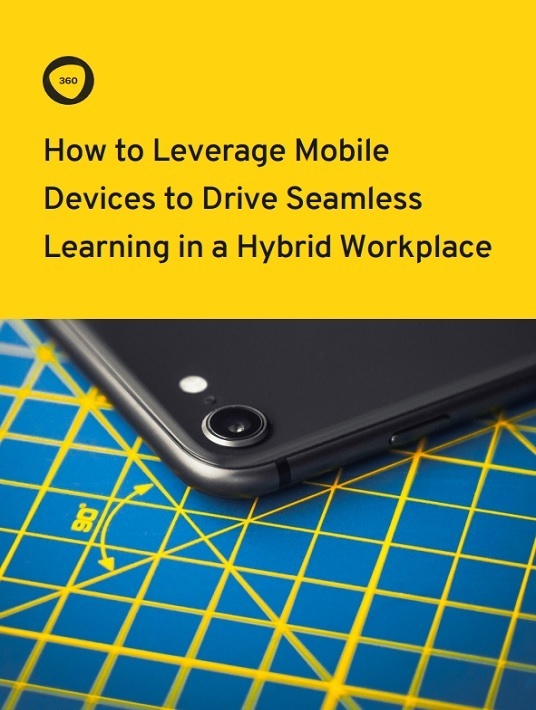

eBook Launch: The 6 Pillars Of A Next-Level Learning Strategy

How do you create an employee development program that ties into your business goals and leverages the latest learning tech? This guide answers that all-important L&D question.

by Christopher Pappas